https://github.com/lsdlab/k8sdjango

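Traditional deployment & container deployment

Advantages of container deployment:

It smooths over differences between systems, so deployments can be migrated quickly.

docker swarm gives you cluster deployment and load balancing quickly, without any additional load balancing module.

Disadvantages of container deployment:

The application is separated from its state, so state files have to be mounted into the container.

Log files have to be mapped from the container to the host machine, and this mapping has some latency.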

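Single machine

Dockerfile

The Dockerfile uses an alpine image, so the image is somewhat smaller. It switches apk to the Aliyun mirror and installs what the postgresql driver needs, and pip also uses the Aliyun mirror, so the build is a bit faster.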

```dockerfile
FROM python:3.7.4-alpine3.10 as build1

ENV PYTHONUNBUFFERED 1
ENV DJANGO_SETTINGS_MODULE conf.production.settings
ENV TZ Asia/Shanghai

RUN mkdir /k8sdjango

RUN echo 'http://mirrors.aliyun.com/alpine/v3.10/community/' >/etc/apk/repositories
RUN echo 'http://mirrors.aliyun.com/alpine/v3.10/main/' >>/etc/apk/repositories

RUN apk update \
    && apk add tzdata \
    && apk add --virtual build-deps gcc python3-dev musl-dev \
    && apk add postgresql-dev \
    && pip install -U pip setuptools -i https://mirrors.aliyun.com/pypi/simple/ \
    && pip install psycopg2-binary -i https://mirrors.aliyun.com/pypi/simple/ \
    && apk del build-deps

COPY requirements.txt /k8sdjango
RUN pip install -r /k8sdjango/requirements.txt -i https://mirrors.aliyun.com/pypi/simple/

FROM python:3.7.4-alpine3.10
COPY --from=build1 / /
COPY . /k8sdjango
WORKDIR /k8sdjango

COPY ./wait-for /bin/wait-for
```

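docker-compose.yml

docker-compose is the orchestration tool. django uses the Dockerfile above as its base image. postgresql, redis and rabbitmq use the standard images pinned to specific versions, each the latest stable release at the time of writing.

postgresql mounts two configuration files into the container, opens port 5432 for remote connections, and persists its data by mapping the container's data directory to the host. redis only persists its data, with no configuration changes and no remote access. rabbitmq sets a username, password and vhost, and persists its data.

nginx opens port 8080 and proxies to gunicorn on port 8080 in the web container. nginx needs its configuration files mounted into the container. The static files of django's built-in admin also have to be mapped to the host machine, and so do the custom logs defined in django.

celery worker and celery beat reuse the same image as web, which avoids extra builds. depends_on combined with wait-for lets the containers start in order, so the error where celery_worker starts before rabbitmq and cannot connect to the broker no longer appears.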

```yaml
version: '3'

services:
  db:
    image: postgres:11.4
    container_name: k8sdjango-postgres
    restart: always
    environment:
      POSTGRES_USER: k8sdjango_production
      POSTGRES_PASSWORD: b8n2maLRb7EUyv8c
      POSTGRES_DB: k8sdjango_production
    ports:
      - '5432:5432'
    volumes:
      - ./compose/postgres_data:/var/lib/postgresql/data
      - ./compose/postgresql.conf:/usr/local/etc/postgresql/11/postgresql.conf
      - ./compose/pg_hba.conf:/usr/local/etc/postgresql/11/pg_hba.conf

  redis:
    image: redis:5.0.5
    container_name: k8sdjango-redis
    restart: always
    ports:
      - '6379:6379'
    volumes:
      - ./compose/redis_data:/data
    depends_on:
      - "db"

  rabbitmq:
    image: rabbitmq:3.7.17-management
    container_name: k8sdjango-rabbitmq
    restart: always
    ports:
      - '5672:5672'
      - '15672:15672'
      - '15674:15674'
    volumes:
      - ./compose/rabbitmq_data:/var/lib/rabbitmq
      - ./compose/rabbitmq_plugins:/etc/rabbitmq/enabled_plugins
    depends_on:
      - "redis"

  nginx:
    image: nginx:1.16
    container_name: k8sdjango-nginx
    restart: always
    ports:
      - "8080:8080"
    volumes:
      - ./compose/nginx:/etc/nginx/conf.d
      - ./compose/static:/k8sdjango/static
      - ./compose/nginx_log:/var/log/nginx
    depends_on:
      - "web"

  web:
    build: .
    image: django-docker-web
    container_name: k8sdjango-web
    command: sh -c "wait-for db:5432 && python manage.py collectstatic --no-input && python manage.py migrate && gunicorn k8sdjango.wsgi --workers 5 --bind 0.0.0.0:8080"
    environment:
      DJANGO_SETTINGS_MODULE: conf.production.settings
      TZ: Asia/Shanghai
    volumes:
      - ./compose/static:/k8sdjango/static
    depends_on:
      - "db"
    expose:
      - "8080"

  celery_worker:
    image: django-docker-web
    container_name: k8sdjango-celery_worker
    command: sh -c "wait-for rabbitmq:5672 && celery -A apps.celeryconfig worker --loglevel=info --autoscale=4,2"
    restart: always
    environment:
      DJANGO_SETTINGS_MODULE: conf.production.settings
      TZ: Asia/Shanghai
    depends_on:
      - "db"
      - "redis"
      - "rabbitmq"
      - "web"
      - "nginx"

  celery_beat:
    image: django-docker-web
    container_name: k8sdjango-celery_beat
    command: sh -c "wait-for rabbitmq:5672 && celery -A apps.celeryconfig beat --loglevel=info"
    restart: always
    environment:
      DJANGO_SETTINGS_MODULE: conf.production.settings
      TZ: Asia/Shanghai
    depends_on:
      - "db"
      - "redis"
      - "rabbitmq"
      - "web"
      - "nginx"
      - "celery_worker"
```
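The wait-for step in the commands above boils down to polling a TCP port until something accepts a connection. The following is a minimal Python sketch of that idea, not the actual wait-for script shipped in the repository; the function name `wait_for` and its `timeout` and `interval` parameters are assumptions made for illustration.

```python
import socket
import time


def wait_for(host: str, port: int, timeout: float = 15.0, interval: float = 0.5) -> bool:
    """Return True once host:port accepts a TCP connection, False on timeout.

    Hypothetical helper: it mirrors the idea behind wait-for, not its code.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: give up after the deadline,
            # otherwise pause briefly and try again.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Inside the compose network, a call such as `wait_for("rabbitmq", 5672)` would play the role of `wait-for rabbitmq:5672`. An open port only shows that the process is listening; it does not guarantee that the service has finished its own initialization.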

Run

```shell
cd k8sdjango
docker-compose build
docker-compose up
```

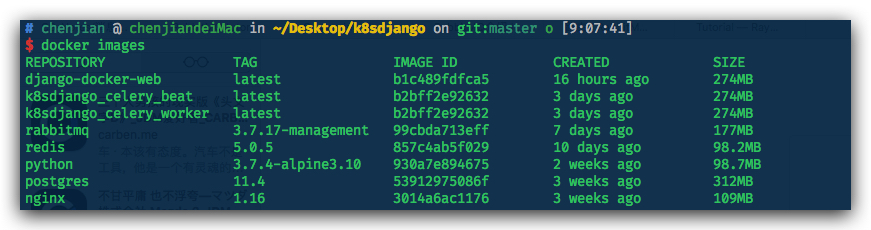

Once the build finishes, you get the following images.

Optimizations

```dockerfile
FROM python:3.7.4-alpine3.10 as build1
```

Use python:3.7.4-alpine3.10 as the first build stage, named build1.

```dockerfile
COPY requirements.txt /k8sdjango

RUN pip install -r /k8sdjango/requirements.txt -i https://mirrors.aliyun.com/pypi/simple/
```

Copy the pip requirements file in first and install the dependencies.

```dockerfile
FROM python:3.7.4-alpine3.10
COPY --from=build1 / /
COPY . /k8sdjango
WORKDIR /k8sdjango
```

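Copy the result of the build1 stage into the final stage, copy the whole project's code into the folder, and change the working directory. Doing it this way makes every image build much faster: as long as requirements, the Dockerfile and docker-compose.yml have not changed, rebuilding the image is basically just copying the code into the image, and everything else comes from the cache.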
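Why the ordering matters can be illustrated with a toy model of layer caching. This is a simplified sketch, not Docker's real implementation: it assumes each layer's cache key depends on the parent layer, the instruction text, and the content of any files the instruction copies in. The helper names and the file `app.py` are hypothetical.

```python
import hashlib


def layer_keys(steps, file_hashes):
    """Compute one cache key per build step (toy model of a layer cache).

    steps: list of (instruction, copied_files) tuples.
    file_hashes: mapping of file name -> content digest.
    """
    keys = []
    parent = ""
    for instruction, copied_files in steps:
        h = hashlib.sha256()
        h.update(parent.encode())       # a changed parent invalidates the child
        h.update(instruction.encode())  # a changed instruction invalidates the layer
        for name in copied_files:
            h.update(file_hashes[name].encode())  # changed content invalidates COPY
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def cached_steps(old_keys, new_keys):
    """Count the leading steps whose keys are unchanged, i.e. served from cache."""
    count = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break
        count += 1
    return count


# Same ordering as the Dockerfile: requirements first, project code last.
STEPS = [
    ("FROM python:3.7.4-alpine3.10", ()),
    ("COPY requirements.txt /k8sdjango", ("requirements.txt",)),
    ("RUN pip install -r /k8sdjango/requirements.txt", ()),
    ("COPY . /k8sdjango", ("requirements.txt", "app.py")),
]

before = layer_keys(STEPS, {"requirements.txt": "r1", "app.py": "a1"})
code_changed = layer_keys(STEPS, {"requirements.txt": "r1", "app.py": "a2"})
deps_changed = layer_keys(STEPS, {"requirements.txt": "r2", "app.py": "a1"})

print(cached_steps(before, code_changed))  # 3: only the final COPY is rebuilt
print(cached_steps(before, deps_changed))  # 1: pip install has to run again
```

In this model, editing application code leaves the dependency layers untouched, while editing requirements.txt invalidates everything from that COPY onward.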

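swarm

docker swarm is the simplest way to deploy a cluster. Docker's built-in overlay network mode provides load balancing: a port published by any service can be reached on any node, the swarm overlay network routes the request, and it ends up at one of the service's containers. The number of containers for each service can also be scaled up or down.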

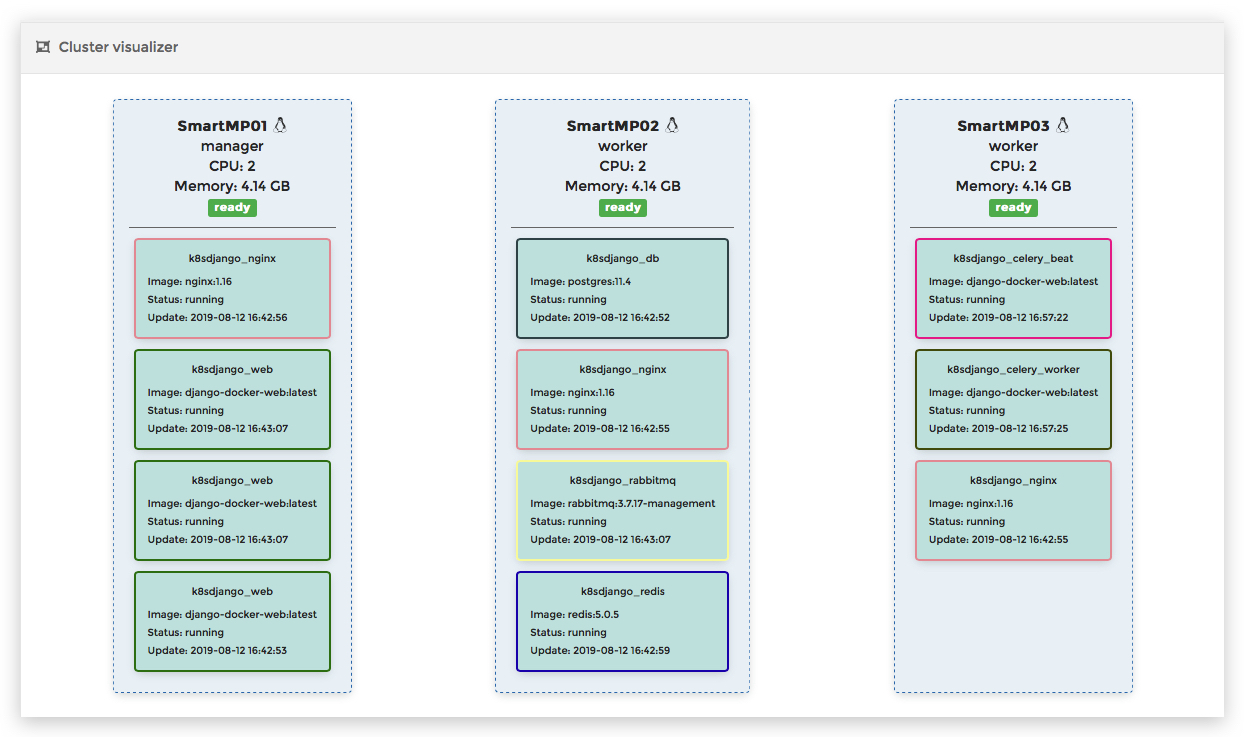

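Three machines are used: one manager and two workers. The swarm configuration file differs little from the single-machine one. The main changes are setting the number of replicas and using placement to pin containers to specific nodes. All three databases are pinned to the second machine, while web and nginx each run several replicas (the file below sets replicas: 4) and swarm distributes them automatically. The SmartMP02 in node.hostname == SmartMP02 is the machine's hostname as set in /etc/hosts.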

docker-compose-stack.yml

```yaml
version: '3'

services:
  db:
    image: postgres:11.4
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints: [node.hostname == SmartMP02]
    environment:
      POSTGRES_USER: k8sdjango_production
      POSTGRES_PASSWORD: b8n2maLRb7EUyv8c
      POSTGRES_DB: k8sdjango_production
    ports:
      - '5432:5432'
    volumes:
      - ./compose/postgres_data:/var/lib/postgresql/data
      - ./compose/postgresql.conf:/usr/local/etc/postgresql/11/postgresql.conf
      - ./compose/pg_hba.conf:/usr/local/etc/postgresql/11/pg_hba.conf

  redis:
    image: redis:5.0.5
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints: [node.hostname == SmartMP02]
    ports:
      - '6379:6379'
    volumes:
      - ./compose/redis_data:/data
    depends_on:
      - "db"

  rabbitmq:
    image: rabbitmq:3.7.17-management
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints: [node.hostname == SmartMP02]
    ports:
      - '5672:5672'
      - '15672:15672'
      - '15674:15674'
    volumes:
      - ./compose/rabbitmq_data:/var/lib/rabbitmq
    depends_on:
      - "redis"

  nginx:
    image: nginx:1.16
    deploy:
      replicas: 4
    ports:
      - "8080:8080"
    volumes:
      - ./compose/nginx:/etc/nginx/conf.d
      - ./compose/static:/k8sdjango/static
      - ./compose/nginx_log:/var/log/nginx
    depends_on:
      - "web"

  web:
    build: .
    image: django-docker-web
    command: sh -c "wait-for db:5432 && python manage.py collectstatic --no-input && python manage.py migrate && gunicorn k8sdjango.wsgi --workers 5 --bind 0.0.0.0:8080"
    deploy:
      replicas: 4
    environment:
      DJANGO_SETTINGS_MODULE: conf.production.settings
      TZ: Asia/Shanghai
    volumes:
      - ./compose/static:/k8sdjango/static
    depends_on:
      - "db"

  celery_worker:
    image: django-docker-web
    command: sh -c "wait-for rabbitmq:5672 && celery -A apps.celeryconfig worker --loglevel=info --autoscale=4,2"
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints: [node.hostname == SmartMP03]
    environment:
      DJANGO_SETTINGS_MODULE: conf.production.settings
      TZ: Asia/Shanghai
    depends_on:
      - "db"
      - "redis"
      - "rabbitmq"
      - "web"
      - "nginx"

  celery_beat:
    image: django-docker-web
    command: sh -c "wait-for rabbitmq:5672 && celery -A apps.celeryconfig beat --loglevel=info"
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
      placement:
        constraints: [node.hostname == SmartMP03]
    environment:
      DJANGO_SETTINGS_MODULE: conf.production.settings
      TZ: Asia/Shanghai
    depends_on:
      - "db"
      - "redis"
      - "rabbitmq"
      - "web"
      - "nginx"
      - "celery_worker"
```
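The placement constraints in the stack file can be pictured as a filter over the cluster's nodes. The sketch below is a toy model of that filtering, not swarm's scheduler: it handles only the `==` and `!=` operators, the helper names are hypothetical, and the node list is an assumption (SmartMP02 and SmartMP03 appear in the stack file, while SmartMP01 as the manager is a guess made for illustration).

```python
def matches(constraint: str, node: dict) -> bool:
    """Evaluate one 'key == value' or 'key != value' constraint against a node."""
    for op in ("==", "!="):
        if op in constraint:
            key, value = (part.strip() for part in constraint.split(op, 1))
            actual = node.get(key)
            return (actual == value) if op == "==" else (actual != value)
    raise ValueError(f"unsupported constraint: {constraint!r}")


def eligible_nodes(constraints, nodes):
    """Return hostnames of nodes that satisfy every constraint."""
    return [
        node["node.hostname"]
        for node in nodes
        if all(matches(c, node) for c in constraints)
    ]


# Assumed cluster layout: one manager and two workers.
NODES = [
    {"node.hostname": "SmartMP01", "node.role": "manager"},
    {"node.hostname": "SmartMP02", "node.role": "worker"},
    {"node.hostname": "SmartMP03", "node.role": "worker"},
]

# db, redis and rabbitmq: pinned to a single node.
print(eligible_nodes(["node.hostname == SmartMP02"], NODES))
# web and nginx: no constraint, so every node is a candidate.
print(eligible_nodes([], NODES))
```

Services without a placement section, such as web and nginx here, stay eligible for every node, which is what lets swarm spread their replicas across the cluster.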

Problems

Run

```shell
cd k8sdjango
docker-compose build

# Deploy the stack
docker stack deploy -c docker-compose-stack.yml k8sdjango

# Tear the stack down
docker stack down k8sdjango

# Find the ID of a web container, then create a superuser inside it
docker ps
docker exec -it --env DJANGO_SETTINGS_MODULE=conf.production.settings f0ef1ad3c0bb python manage.py createsuperuser
```

At this point, visiting :8080/admin/ on any of the three machines opens django's built-in admin page.

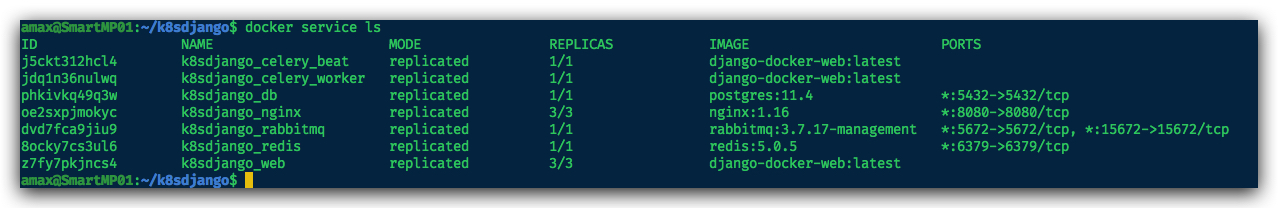

Getting the service list

https://www.portainer.io/ is a Docker management UI. Among other features, it can visualize a swarm cluster. The visualization of this example after deploying it with swarm looks like this.

The services list is as follows.

Optimizations